How to BLOCK Bingbot to save crawl budget

One day, our team started getting alerts that our client's website wasn't working: it had become slow for customers.

We looked at the activity logs and saw that Bingbot was hammering the site:

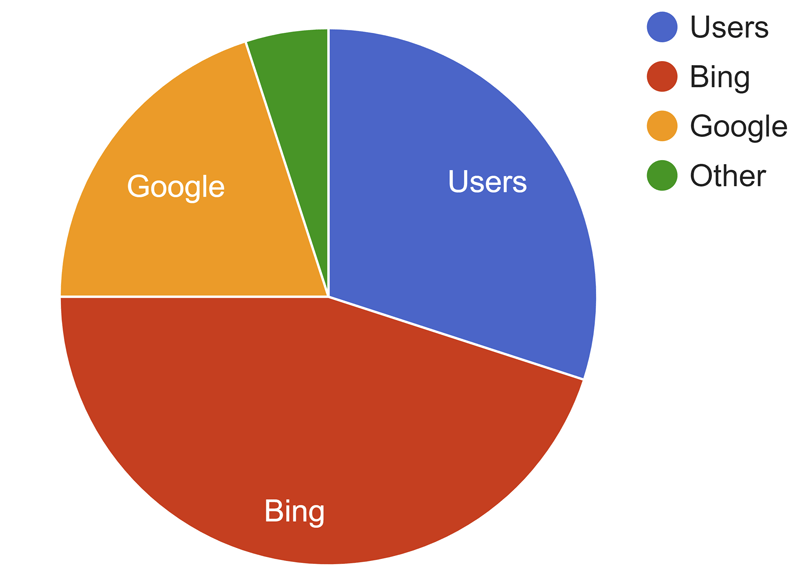

Distribution of website traffic

Roughly 30% users, 50% Bing, 15% Google, and 5% other.
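If you want a similar breakdown for your own site, a minimal Python sketch like the one below can bucket requests by their User-Agent header. The `classify` and `traffic_share` helpers and the bucket rules are hypothetical, and substring matching is only a rough heuristic: user agents can be spoofed, so a real audit should also verify crawler IPs (for example with a reverse DNS lookup).

```python
from collections import Counter
from typing import Dict, Iterable

def classify(user_agent: str) -> str:
    """Bucket a request by its User-Agent header (rough heuristic only)."""
    ua = user_agent.lower()
    if "bingbot" in ua:
        return "Bing"
    if "googlebot" in ua:
        return "Google"
    # Any other self-identified crawler lands in "Other".
    if any(token in ua for token in ("bot", "crawler", "spider")):
        return "Other"
    return "Users"

def traffic_share(user_agents: Iterable[str]) -> Dict[str, float]:
    """Return each bucket's share of requests, in percent."""
    counts = Counter(classify(ua) for ua in user_agents)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {bucket: 100 * n / total for bucket, n in counts.items()}

# Hypothetical sample: User-Agent strings pulled from four log lines.
sample = [
    "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)",
    "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)",
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36",
]
print(traffic_share(sample))  # {'Bing': 50.0, 'Google': 25.0, 'Users': 25.0}
```

In practice you would feed it the User-Agent field from every line of a day's access log rather than a hand-picked list.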

Well-behaved crawlers usually rate-limit themselves: they slow down when the site starts responding slowly or returning errors. But Bingbot either couldn't sense that it was breaking the site for regular visitors, or its rate-limiting just didn't work well here. The crawl never slowed down.

If it were my personal site, the solution would have been simple: just block the Bingbot user agent!

Who uses Bing anyway, right?

Well, people do: about 3% of them.

Our site was an eCommerce site built to maximize SEO traffic. Google was king, but there was still some $$ coming from Bing.

We had to find balance.

How to slow down the Bingbot

According to Microsoft, webmasters can control Bingbot's crawl speed with four Crawl-delay settings in robots.txt:

| Crawl-delay setting | Speed |
|---|---|
| Not set | Normal 👀 |
| 1 | Slow |
| 5 | Very slow |
| 10 | Extremely slow |
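To get a feel for what these settings mean in numbers, the sketch below assumes Bing treats Crawl-delay: N as "at most one request per N-second window." That interpretation is our assumption for illustration, not something the table above states, so treat the results as rough upper bounds. The `max_requests_per_day` helper is hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

def max_requests_per_day(crawl_delay_seconds: int) -> int:
    """Upper bound on daily requests, ASSUMING the crawler sends at most
    one request per `crawl_delay_seconds`-second window."""
    if crawl_delay_seconds <= 0:
        raise ValueError("crawl delay must be a positive number of seconds")
    return SECONDS_PER_DAY // crawl_delay_seconds

for delay in (1, 5, 10):
    print(f"Crawl-delay: {delay:>2} -> at most {max_requests_per_day(delay):,} requests/day")
```

Under that assumption, Crawl-delay: 10 caps Bingbot at 8,640 requests per day, which is small for a large eCommerce catalog but easy on the server.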

We decided to slow Bingbot down rather than block it completely.

So we simply added this two-liner to the robots.txt file:

```
User-agent: bingbot
Crawl-delay: 10
```

The Crawl-delay: 10 directive tells Bingbot to crawl the site "extremely slowly."
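You can sanity-check a robots.txt group like this locally with Python's standard-library `urllib.robotparser`. This is only a sketch: Python's parser is not Bingbot, so it shows how a typical parser reads the file, not how Bing will behave. It does illustrate one detail: keep the two directives on consecutive lines, because in this parser a blank line directly after `User-agent` discards that group.

```python
from urllib.robotparser import RobotFileParser

# The robots.txt group from above. Keep the directives on consecutive lines:
# in urllib.robotparser, a blank line right after "User-agent" drops the group.
ROBOTS_TXT = """\
User-agent: bingbot
Crawl-delay: 10
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# crawl_delay() takes the crawler's product token, e.g. "bingbot".
print(parser.crawl_delay("bingbot"))    # 10
print(parser.crawl_delay("Googlebot"))  # None: no group matches Googlebot
```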

A couple of days later, Bingbot listened:

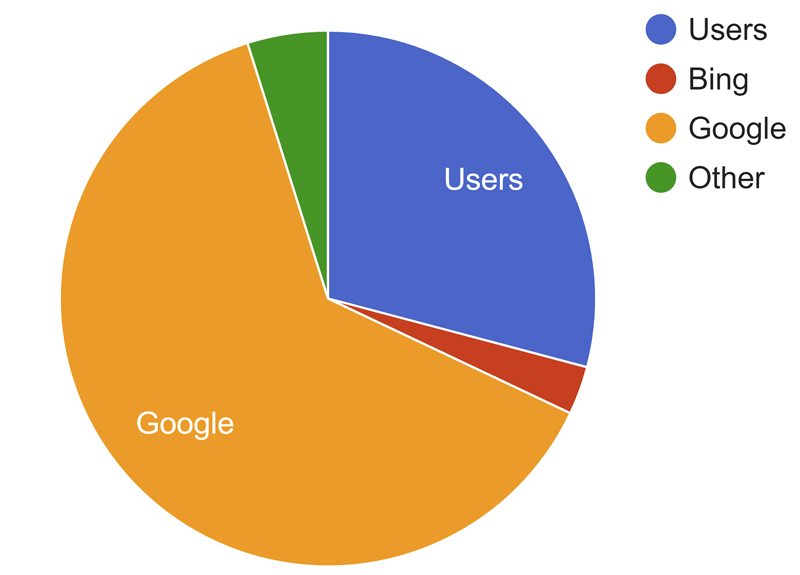

Distribution of website traffic after adding a crawl-delay for Bingbot.

Roughly 35% users, 2% Bing, 60% Google, and 2% other.

Get Google to crawl your site more

Great, Bingbot is down! But isn't Googlebot hammering the site now?

Googlebot stands out among crawlers because Google pays attention to the load it puts on your server:

- If the site responds quickly, the crawl limit goes up

- If the site slows down or responds with a lot of HTTP 500 errors, the limit goes down, and Googlebot crawls less
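The feedback loop in the list above can be illustrated with a toy controller. To be clear, this is not Google's actual algorithm: it's a generic additive-increase/multiplicative-decrease (AIMD) sketch, and the `AdaptiveCrawlLimit` class, its thresholds, and its step sizes are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class AdaptiveCrawlLimit:
    """Toy crawl-rate controller (NOT Google's real algorithm).

    All thresholds and step sizes are illustrative assumptions.
    """
    rate: float = 1.0              # requests per second currently allowed
    min_rate: float = 0.1
    max_rate: float = 10.0
    slow_ms: float = 1000.0        # average response time that counts as "slow"
    max_error_ratio: float = 0.05  # tolerated share of HTTP 5xx responses

    def update(self, avg_response_ms: float, error_ratio: float) -> float:
        """Adjust the rate after observing a batch of responses."""
        if avg_response_ms > self.slow_ms or error_ratio > self.max_error_ratio:
            # Site is struggling: back off sharply (multiplicative decrease).
            self.rate = max(self.min_rate, self.rate / 2)
        else:
            # Site is healthy: probe for more capacity (additive increase).
            self.rate = min(self.max_rate, self.rate + 0.5)
        return self.rate

limit = AdaptiveCrawlLimit()
print(limit.update(avg_response_ms=200, error_ratio=0.0))   # 1.5 (fast, healthy)
print(limit.update(avg_response_ms=200, error_ratio=0.0))   # 2.0
print(limit.update(avg_response_ms=2500, error_ratio=0.2))  # 1.0 (slow + 5xx errors)
```

Backing off faster than it ramps up is the design choice that keeps a struggling site from being pushed over the edge.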

Even though Googlebot was now hitting the site more often, we no longer had the HTTP 500 errors that Bingbot had been causing.

Control Google's Crawl Budget to Your Advantage

Slowing down Bingbot tremendously increased our crawl budget with Google.

Crawl budget is the number of pages Google will crawl on your site on any given day.
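One rough way to watch this number from your side is to count Googlebot requests per day in your access logs. The sketch below assumes the Apache/Nginx "combined" log format and uses a hypothetical `googlebot_hits_per_day` helper with made-up sample lines. It counts every request, including images and other resources, so it only approximates crawl budget; Google Search Console's Crawl Stats report is the authoritative view. As with any User-Agent check, spoofed requests will be counted too.

```python
import re
from collections import Counter
from typing import Dict, Iterable

# Captures the date part of the [timestamp] and the last quoted field
# (the User-Agent) of a "combined" format log line.
LOG_RE = re.compile(r'\[(\d{2}/\w{3}/\d{4}):[^\]]*\].*"([^"]*)"\s*$')

def googlebot_hits_per_day(lines: Iterable[str]) -> Dict[str, int]:
    """Count requests whose User-Agent mentions Googlebot, grouped by day."""
    counts = Counter()
    for line in lines:
        match = LOG_RE.search(line)
        if match and "googlebot" in match.group(2).lower():
            counts[match.group(1)] += 1
    return dict(counts)

# Hypothetical sample data (documentation IP range, invented paths).
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BINGBOT_UA = "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"
sample_log = [
    f'203.0.113.10 - - [10/Oct/2023:13:55:36 +0000] "GET /p/1 HTTP/1.1" 200 512 "-" "{GOOGLEBOT_UA}"',
    f'203.0.113.10 - - [10/Oct/2023:18:02:11 +0000] "GET /p/2 HTTP/1.1" 200 498 "-" "{GOOGLEBOT_UA}"',
    f'203.0.113.10 - - [11/Oct/2023:07:45:00 +0000] "GET /p/3 HTTP/1.1" 200 530 "-" "{GOOGLEBOT_UA}"',
    f'203.0.113.20 - - [11/Oct/2023:07:46:10 +0000] "GET /p/4 HTTP/1.1" 200 530 "-" "{BINGBOT_UA}"',
]
print(googlebot_hits_per_day(sample_log))  # {'10/Oct/2023': 2, '11/Oct/2023': 1}
```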

More crawling won't necessarily make you rank better in the SERPs, but it will give you better visibility by making sure crawlers get to your content sooner.

Increased visibility was an advantage for us because we knew we had great content that would rank well.

How to Block Bingbot

If you want to block Bingbot from crawling your site completely, add the following lines to your robots.txt file:

```
User-agent: bingbot
Disallow: /
```
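To double-check that this rule only affects Bingbot, you can run it through Python's standard-library `urllib.robotparser`. As before, this is a local sanity check with a hypothetical example.com URL, not a simulation of how Bingbot itself reads the file.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: bingbot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical URL; any path on the site behaves the same under "Disallow: /".
url = "https://www.example.com/products/"
print(parser.can_fetch("bingbot", url))    # False: the bingbot group disallows everything
print(parser.can_fetch("Googlebot", url))  # True: no group applies, so access is allowed
```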

Josip Miskovic is a software developer at Americaneagle.com. Josip has 10+ years of experience developing web applications, mobile apps, and games.
