REST API Rate Limiting: Best Practices [2023]

Rate limiting is an important piece of REST API best practices.

Rate limiting is a technique used to control the rate at which a service processes incoming requests to ensure stability and prevent overuse.

At the end of this article, you'll be an expert on rate limiting!

- What is Rate Limiting?

- How does Rate Limiting work?

- Why add Rate Limiting to your API?

- How to decide on the rate limit?

- How to implement rate limiting?

- Is rate limiting necessary for my REST API?

- Is Rate Limiting the same as DDoS protection?

- Request size limit

- API Rate Limiting Algorithms

- Load Shedding and Rate Limiting

What is Rate Limiting?

Rate Limiting is a technique used to control the rate of incoming requests to an API.

Rate Limiting prevents overuse of the API so each user gets a fair share of the API's resources.

If you are building APIs for many consumers, rate limiting is crucial to help with the stability of the API.

Some systems that rate limiting helps protect include:

- Databases

- Network Infrastructure

- Application code

How does Rate Limiting work?

Rate limiting works by the backend server keeping track of the request count for each client.

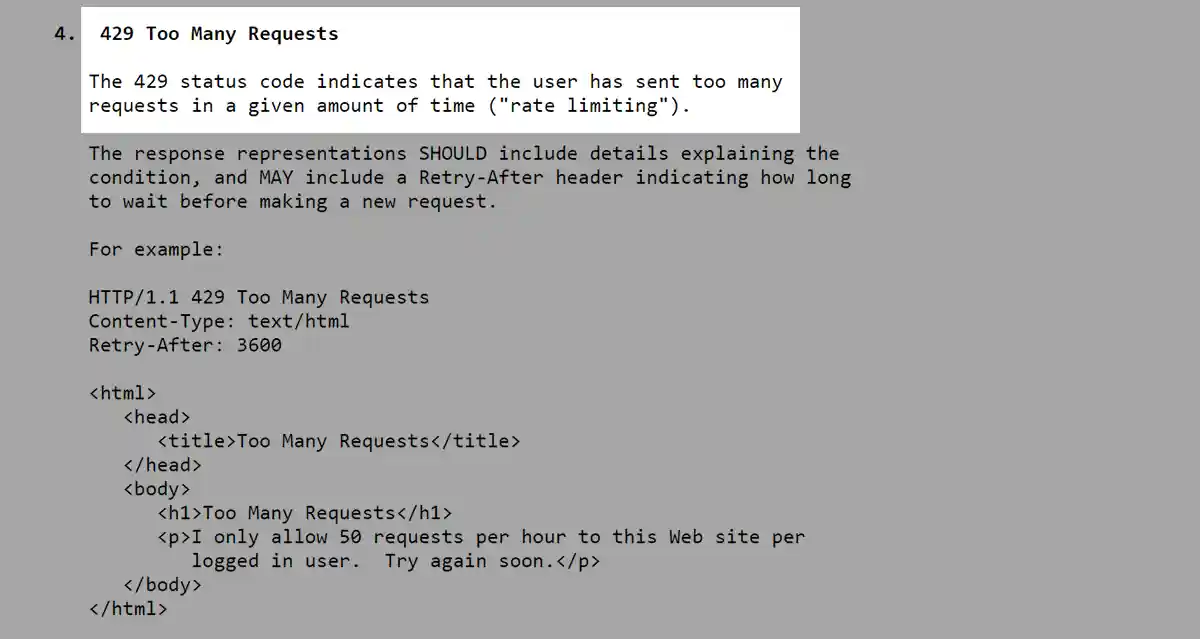

If a client exceeds the rate limit (for example, 30 requests per minute), the backend server responds with the HTTP status code 429 "Too Many Requests", as defined in RFC 6585.

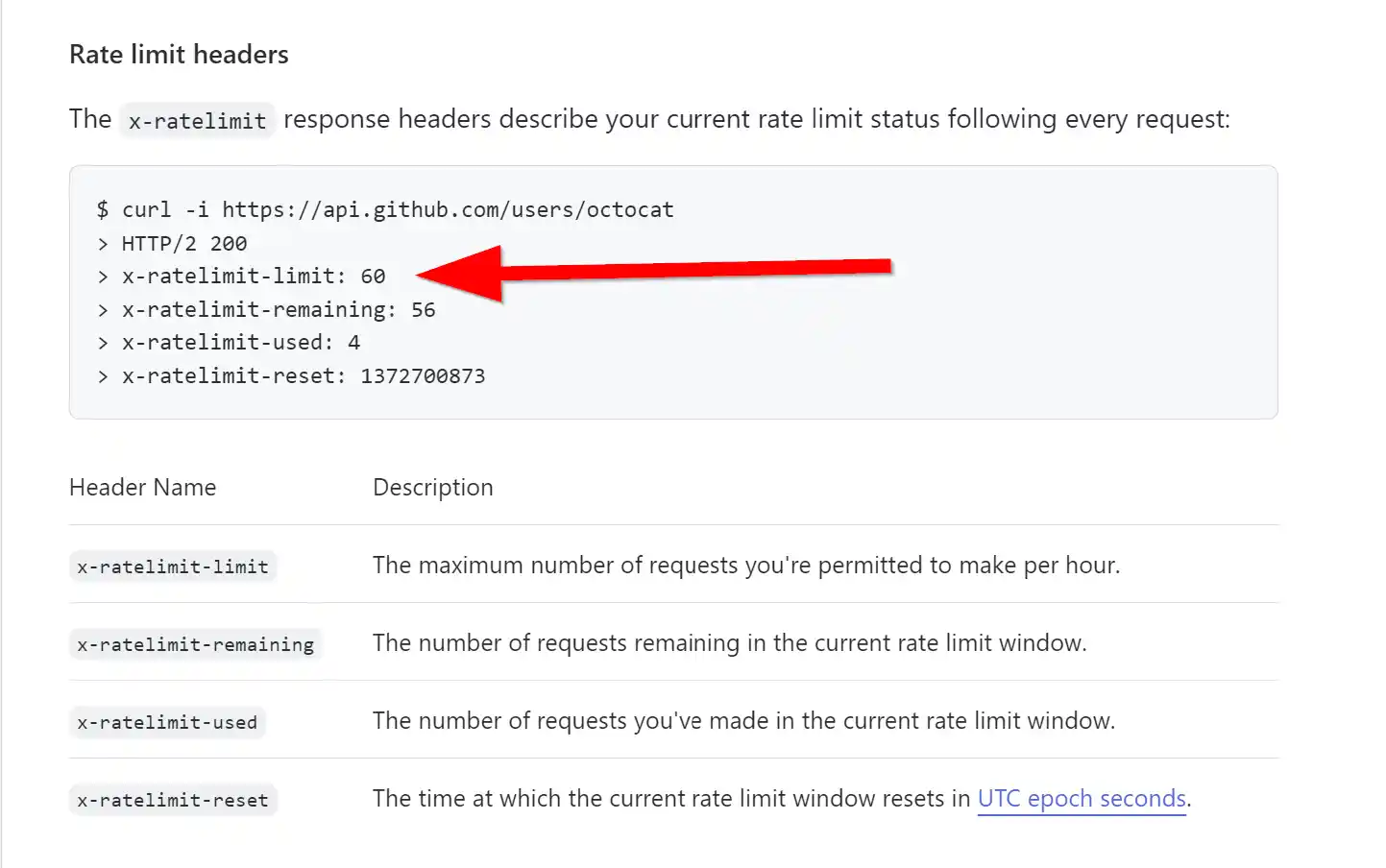

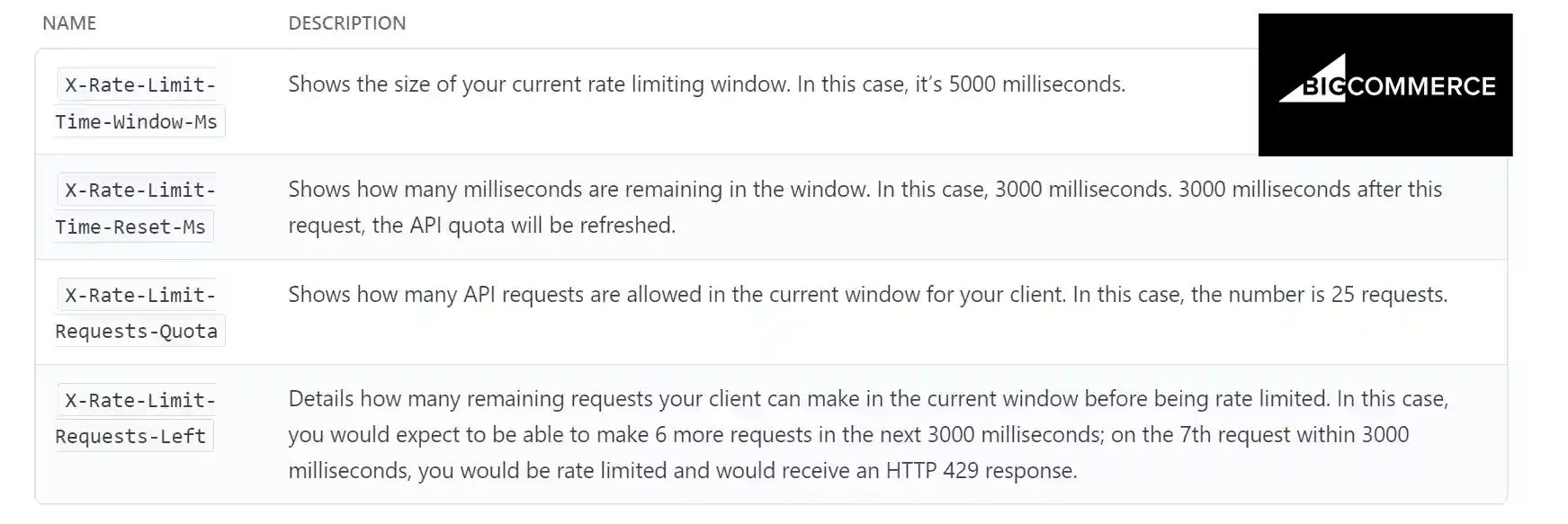

Rate-Limiting Headers

Rate-limiting headers are HTTP headers that are returned by a REST API in response to an API request.

These headers contain information about the rate-limiting policy being enforced by the API, including the number of requests remaining in the current rate-limiting window, and the time until the next window begins.

Rate-limiting headers are useful for API clients to establish error handling. They are a useful indicator to adjust the usage of the API or to implement client-side rate-limiting strategies to avoid exceeding the API's rate limits.

There is no widely adopted standard for rate-limiting headers. However, some commonly used headers for indicating rate-limiting information to clients include:

| Header Name | Description |
|---|---|
| X-Rate-Limit-Limit | The maximum number of requests allowed in a time window. |
| X-Rate-Limit-Remaining | The number of requests remaining in the current time window. |
| X-Rate-Limit-Reset | The time when the rate limit will be reset (in UTC or Unix timestamp). |
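As a rough sketch of how a client might use these headers to pace itself, the helper below computes how long to wait before the next request. The function name `seconds_until_allowed` is hypothetical, and the sketch assumes the header names from the table above and that `X-Rate-Limit-Reset` carries a Unix timestamp in seconds; a real API may use different names or formats.

```python
import time
from typing import Mapping, Optional


def seconds_until_allowed(headers: Mapping[str, str], now: Optional[float] = None) -> float:
    """Return how many seconds a client should wait before its next request.

    Assumes X-Rate-Limit-Reset is a Unix timestamp in seconds (an assumption,
    not a standard). Header lookup here is case-sensitive for simplicity.
    """
    now = time.time() if now is None else now
    remaining = int(headers.get("X-Rate-Limit-Remaining", "1"))
    if remaining > 0:
        return 0.0  # budget left in the current window, no need to wait
    reset_at = float(headers.get("X-Rate-Limit-Reset", now))
    return max(0.0, reset_at - now)
```

A client could call this after every response and sleep for the returned duration before sending the next request.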

Rate Limiting Metrics

Rate limiting time window:

- requests per minute

- requests per hour

Rate limiting can also be set per:

- consumer (API authentication method, API key, IP Address)

- endpoint

- request type (GET, POST, PUT, DELETE, etc.)
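One way to combine these dimensions is a small policy table that is consulted from most specific to least specific. The sketch below is illustrative only: the `POLICIES` table, its numbers, and the `resolve` helper are hypothetical and not taken from any particular product.

```python
from typing import Dict, NamedTuple, Optional, Tuple


class Limit(NamedTuple):
    max_requests: int
    window_seconds: int


# Hypothetical policy table: (HTTP method, endpoint) -> limit.
# None acts as a wildcard for that position.
POLICIES: Dict[Tuple[Optional[str], Optional[str]], Limit] = {
    ("POST", "/orders"): Limit(10, 60),    # stricter limit for writes
    (None, "/orders"): Limit(100, 60),     # any other method on /orders
    (None, None): Limit(1000, 3600),       # default for everything else
}


def resolve(consumer_id: str, method: str, endpoint: str) -> Tuple[str, Limit]:
    """Return the counter key and the limit that apply to one request."""
    for key in ((method, endpoint), (None, endpoint), (None, None)):
        if key in POLICIES:
            # The counter is scoped to the consumer and the matched policy,
            # so requests sharing a policy also share one counter.
            scope = f"{key[0] or '*'} {key[1] or '*'}"
            return f"{consumer_id}|{scope}", POLICIES[key]
    raise KeyError("no default policy configured")
```

Here `consumer_id` would typically be an API key or IP address, and the returned key identifies the counter that the chosen rate-limiting algorithm should update.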

Why add Rate Limiting to your API?

The main benefits of rate limiting are:

- Stability: Prevents overloading of the API server and ensures the stability of the API by limiting the number of requests from a single client.

- Fairness: Ensures fair distribution of resources by limiting the rate of requests from each client.

- Security: Protects the API from malicious or excessive usage, preventing potential security risks and preserving the integrity of the data.

- Monetization: Allows API providers to establish quotas and offer different service levels and pricing plans to clients based on their usage.

- Cost control: Prevents overcharges if the underlying API is priced based on usage.

How to decide on the rate limit?

To decide on the appropriate rate limit for your API, follow these steps:

- Determine the max number of requests the API can handle: You need to determine the maximum number of requests that your API back end can handle per second, minute or hour. This will help you set realistic rate limits.

- Define your SLA: Define the Service Level Agreement (SLA) you want to offer to your API consumers. The SLA will help you determine the desired response time for your API and, in turn, help you set appropriate rate limits.

- Identify key use cases: Identify the key use cases for your API and prioritize them. This will help you determine which endpoints should be rate limited first.

- Monitor API usage: Monitor your API usage regularly and collect data about the number of requests per endpoint, consumer, and time window. Use this data to determine your current rate limits.

- Test and adjust limits: Test your rate limits and adjust them as needed. You can use the data collected from monitoring your API usage to make informed decisions about rate limits.

- Provide feedback to consumers: Provide feedback to your API consumers about their usage, including the rate limit they have reached and when they can make their next request.
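As a back-of-the-envelope starting point for the first step, a measured capacity can be turned into a per-consumer limit. The sketch below is only one possible heuristic: the function name, the idea of reserving headroom, and the even split across consumers are all assumptions, and the numbers in the usage note are made up.

```python
def per_consumer_limit(
    capacity_per_second: int,
    headroom_percent: int,
    active_consumers: int,
    window_seconds: int,
) -> int:
    """Split usable capacity evenly across consumers for one time window.

    Integer arithmetic is used so the result is always a whole number of
    requests, rounded down.
    """
    # Keep some capacity in reserve for spikes and internal traffic.
    usable_per_second = capacity_per_second * (100 - headroom_percent) // 100
    return usable_per_second * window_seconds // active_consumers
```

For a hypothetical back end that handles 500 requests per second, with 20% headroom and 100 active consumers, `per_consumer_limit(500, 20, 100, 60)` gives 240 requests per minute per consumer. Monitoring data should then be used to adjust that first estimate.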

How to implement rate limiting?

There are multiple ways to implement rate limiting for your REST APIs. Some common methods include:

-

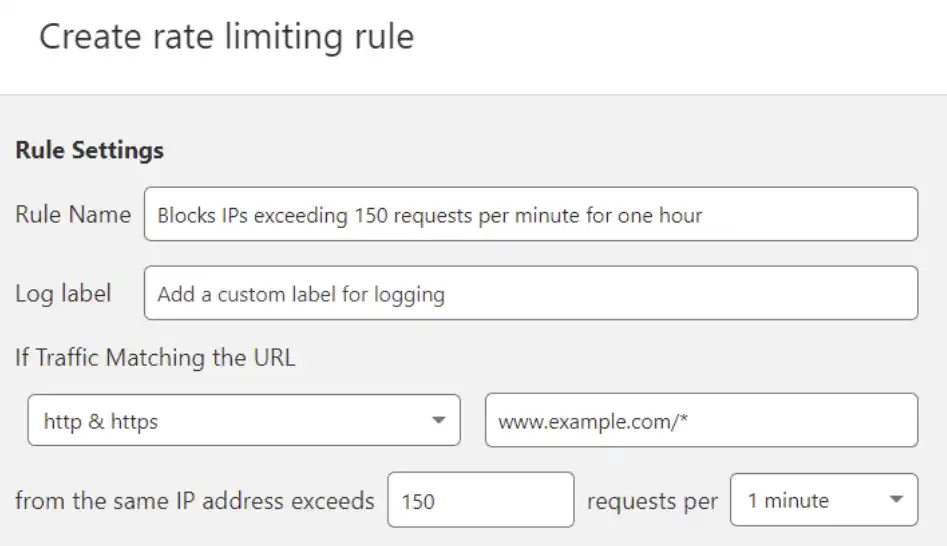

Web Application Firewall (WAF): Most WAFs, such as Cloudflare, offer rate limiting as part of web security services. You can set rate limits based on various factors such as IP address, URL, and request type.

-

API Management: Using an API Management solution such as AWS API Gateway, Azure API Management or Google Cloud Endpoints provides rate limiting functionality. These platforms allow you to define rate limits based on various factors, including the API key, endpoint, and time window.

-

Custom Implementation: You can implement rate limiting directly in your application code. This can be done by tracking the number of requests from each consumer and responding with an error status code when the limit is reached.
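For the custom route, the HTTP plumbing can be kept separate from the counting logic. The sketch below wraps any WSGI application and leaves the decision to an `allow(client_id)` callable that you supply. The name `rate_limit_middleware`, the `X-Api-Key` header used to identify clients, and the fixed `Retry-After` value are assumptions made for illustration.

```python
from typing import Callable, Iterable


def rate_limit_middleware(app, allow: Callable[[str], bool], retry_after_seconds: int = 60):
    """Wrap a WSGI app and reject requests for which allow(client_id) is False."""

    def wrapped(environ, start_response) -> Iterable[bytes]:
        # Assumption: consumers are identified by an X-Api-Key header,
        # falling back to the remote IP address.
        client_id = environ.get("HTTP_X_API_KEY") or environ.get("REMOTE_ADDR", "unknown")
        if not allow(client_id):
            body = b"Too Many Requests"
            start_response(
                "429 Too Many Requests",
                [
                    ("Content-Type", "text/plain"),
                    ("Content-Length", str(len(body))),
                    ("Retry-After", str(retry_after_seconds)),
                ],
            )
            return [body]
        # Under the limit: hand the request to the real application.
        return app(environ, start_response)

    return wrapped
```

Any rate-limiting algorithm can be plugged in as `allow`, as long as it tracks requests per client and returns `False` once the limit is reached.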

Is rate limiting necessary for my REST API?

No, rate limiting is not a necessary part of REST API design.

If you have a small API that serves a handful of B2B users, adding rate limiting is unnecessary.

However, if your REST API is intended to be used by a large number of consumers, or if it relies on limited resources, then rate limiting is a powerful tool you can use to ensure stability and prevent system overload.

Is Rate Limiting the same as DDoS protection?

Rate limiting and DDoS protection are different concepts.

Rate limiting is a technique used to manage the rate of incoming requests to an API or service, while DDoS protection is a security measure aimed at protecting against distributed denial-of-service attacks.

Rate limiting is used to control access to an API by limiting the number of requests that can be made by a single user or client within a specified period, while DDoS protection is used to prevent malicious actors from overwhelming a server or network with a large volume of traffic, disrupting normal service.

Rate limiting can help mitigate the impact of DDoS attacks, but it is not a substitute for DDoS protection.

Even APIs protected by rate limiting can be victims of DDoS attacks. A DDoS attack can overwhelm the rate-limiting mechanism itself.

Request size limit

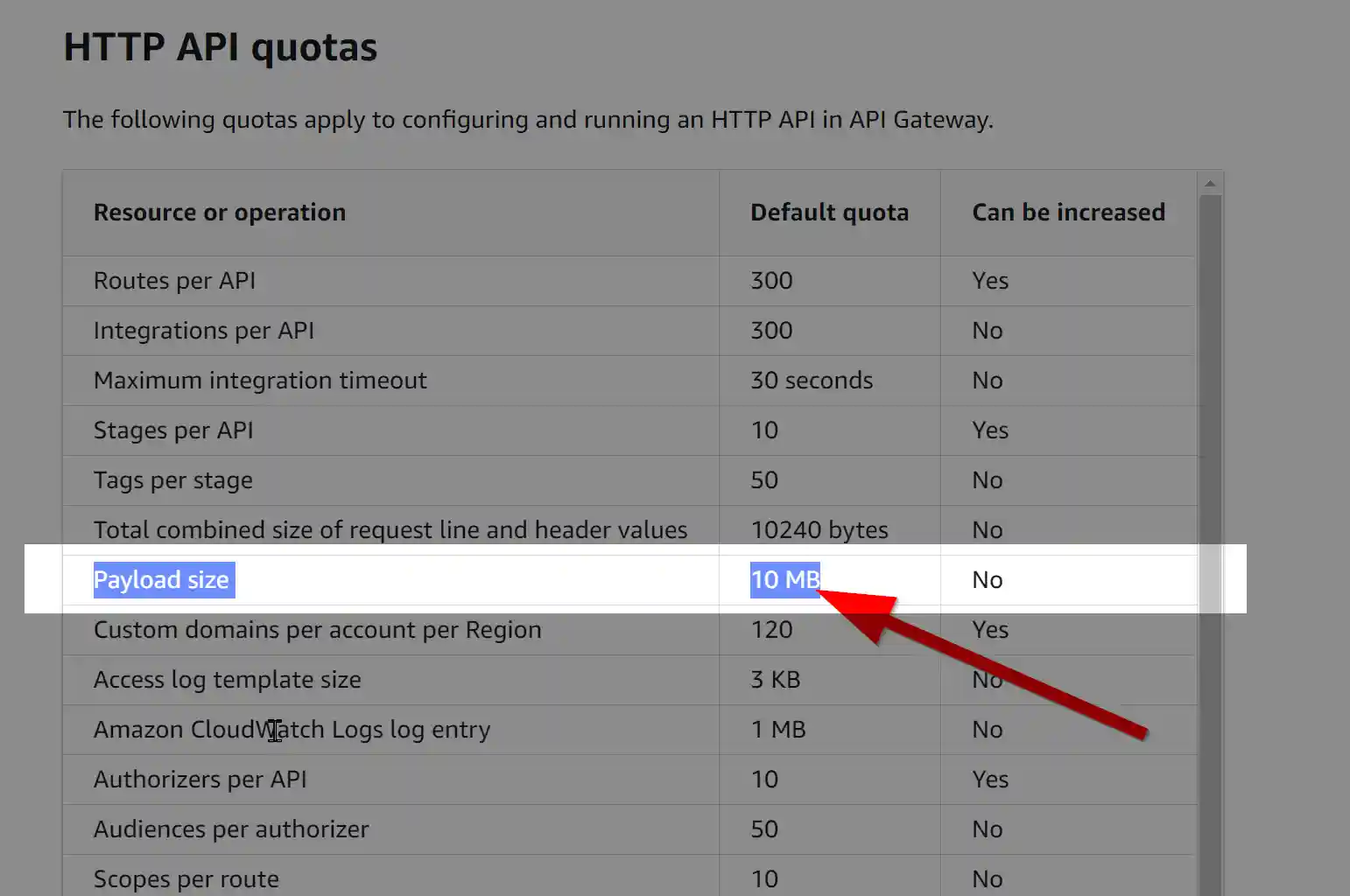

Some REST APIs enforce request size limits as a form of rate limiting.

A request size limit enforces a maximum allowed size for the request payload, usually measured in kilobytes. The limit is usually set to prevent clients from sending large amounts of data that may overburden the server, consume excessive bandwidth, or cause other performance issues.
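A minimal sketch of such a check, based on the `Content-Length` header, might look like the following. The 64 KB limit and the `check_request_size` helper are hypothetical, and rejecting requests without a `Content-Length` is a policy choice rather than a requirement. A production server would also need to cap the number of bytes it actually reads, since the header can be absent or wrong.

```python
from typing import Mapping

MAX_BODY_BYTES = 64 * 1024  # hypothetical 64 KB limit


def check_request_size(headers: Mapping[str, str], max_bytes: int = MAX_BODY_BYTES) -> int:
    """Return 200 if the request may proceed, otherwise an HTTP error status."""
    raw = headers.get("Content-Length")
    if raw is None:
        return 411  # Length Required: this sketch insists on a declared size
    try:
        length = int(raw)
    except ValueError:
        return 400  # Bad Request: header is not a number
    if length < 0:
        return 400
    if length > max_bytes:
        return 413  # Payload Too Large
    return 200
```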

API Rate Limiting Algorithms

Leaky Bucket

The Leaky Bucket algorithm is a rate limiting technique that places incoming requests in a first-in, first-out (FIFO) buffer (the "bucket") of limited size and processes them at a constant rate. When the buffer is full, new requests are discarded until there is room in the buffer again. This algorithm aims to distribute incoming requests evenly over time, preventing the server from being overwhelmed by spikes in traffic.
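The idea can be sketched as a bounded queue that is drained at a constant rate. The `LeakyBucket` class below is a simplified, single-threaded illustration with hypothetical names; the caller passes in the current time (for example from `time.monotonic()`), which keeps the behavior easy to follow.

```python
from collections import deque
from typing import Deque, List


class LeakyBucket:
    """FIFO buffer of limited size that is drained at a constant rate."""

    def __init__(self, capacity: int, leak_rate_per_second: float):
        self.capacity = capacity
        self.leak_rate = leak_rate_per_second
        self.queue: Deque[str] = deque()
        self.last_leak = 0.0

    def offer(self, request_id: str, now: float) -> bool:
        """Queue a request; return False (discard it) when the bucket is full."""
        if len(self.queue) >= self.capacity:
            return False
        if not self.queue:
            # Start the drain clock when the bucket goes from empty to
            # non-empty, so idle time cannot be saved up for a later burst.
            self.last_leak = now
        self.queue.append(request_id)
        return True

    def leak(self, now: float) -> List[str]:
        """Return the requests that are due for processing, oldest first."""
        due = int((now - self.last_leak) * self.leak_rate)
        if due <= 0:
            return []
        # Advance the clock by whole leak intervals only.
        self.last_leak += due / self.leak_rate
        return [self.queue.popleft() for _ in range(min(due, len(self.queue)))]
```

In this sketch a worker would call `leak` periodically and process whatever it returns, while the request handler calls `offer` and rejects the request when it returns `False`.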

Fixed Window

The Fixed Window algorithm is a type of rate limiting algorithm that restricts the rate at which requests are processed by defining a fixed time window during which a limited number of requests can be made. After the time window has passed, the counter is reset and the process starts over. This type of algorithm is simple to implement and is often used in real-time systems where the rate of incoming requests must be limited to prevent overloading.

This technique doesn't prevent bursts of requests. For example, if the rate limit is 5000 requests per hour, a client can make all 5000 requests in the first minute and none for the next 59 minutes. The Fixed Window algorithm allows all of those requests through, even though in a real-world scenario the server might not be able to handle such a sudden spike in traffic.
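A minimal in-memory sketch of a fixed window counter is shown below. The class and parameter names are hypothetical, state is kept in a plain dictionary (so it would not be shared across multiple servers), and the caller passes the current time in seconds.

```python
from typing import Dict, Tuple


class FixedWindowLimiter:
    """Allow at most `limit` requests per client in each fixed time window."""

    def __init__(self, limit: int, window_seconds: int):
        self.limit = limit
        self.window_seconds = window_seconds
        # client id -> (window index, requests counted in that window)
        self.counters: Dict[str, Tuple[int, int]] = {}

    def allow(self, client_id: str, now: float) -> bool:
        window = int(now // self.window_seconds)
        seen_window, count = self.counters.get(client_id, (window, 0))
        if seen_window != window:
            count = 0  # a new window has started, so the counter resets
        if count >= self.limit:
            self.counters[client_id] = (window, count)
            return False
        self.counters[client_id] = (window, count + 1)
        return True
```

The same sketch also shows a related weakness at window boundaries: with a limit of 5 per 60 seconds, a client can send 5 requests at second 59 and 5 more at second 60, so 10 requests pass within about one second.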

Sliding Log

Sliding Log is a rate limiting algorithm that counts the number of requests made in a sliding window of time.

In this algorithm, the server records the timestamp of each request in a log data structure. The window is "sliding" because it always covers the most recent period: as time moves, older requests are removed from the log, and only the most recent requests are counted against the rate limit.

The disadvantages of the Sliding Log algorithm include a higher implementation complexity compared to other algorithms, and a need for continuous storage of the request log.
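A compact sketch of a sliding log, using one queue of timestamps per client, could look like this. Names are hypothetical and the log lives in process memory, which also illustrates the storage cost: every accepted request keeps an entry until it ages out of the window.

```python
from collections import defaultdict, deque
from typing import DefaultDict, Deque


class SlidingLogLimiter:
    """Allow at most `limit` requests per client in any trailing window."""

    def __init__(self, limit: int, window_seconds: float):
        self.limit = limit
        self.window_seconds = window_seconds
        # client id -> timestamps of accepted requests, oldest first
        self.logs: DefaultDict[str, Deque[float]] = defaultdict(deque)

    def allow(self, client_id: str, now: float) -> bool:
        log = self.logs[client_id]
        cutoff = now - self.window_seconds
        # Drop entries that have slid out of the window.
        while log and log[0] <= cutoff:
            log.popleft()
        if len(log) >= self.limit:
            return False
        log.append(now)
        return True
```

Whether rejected requests are also written to the log is a design choice; this sketch records only accepted ones.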

Sliding Window

The Sliding Window algorithm combines the best of Fixed Window and Sliding Log algorithms.

It keeps a request counter for each fixed period and weights the previous period's count by how much of that period still overlaps the sliding window, which smooths out bursts of traffic.

This algorithm is ideal for processing large amounts of requests as it is light and fast to run. The time window for rate limiting can vary depending on the needs and use case of the API and can reset after a certain period has passed, such as every hour or every day.
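One common way to realize this is a sliding window counter that keeps two counters per client and estimates the request count in the trailing window. The sketch below follows that interpretation, which is an assumption about what the description above intends; names are hypothetical and the clock is assumed never to move backwards.

```python
from typing import Dict, Tuple


class SlidingWindowLimiter:
    """Estimate the trailing-window count from two fixed-window counters."""

    def __init__(self, limit: int, window_seconds: int):
        self.limit = limit
        self.window_seconds = window_seconds
        # client id -> (window index, current count, previous count)
        self.state: Dict[str, Tuple[int, int, int]] = {}

    def allow(self, client_id: str, now: float) -> bool:
        window = int(now // self.window_seconds)
        seen, current, previous = self.state.get(client_id, (window, 0, 0))
        if window == seen + 1:
            previous, current = current, 0  # moved into the next window
        elif window > seen + 1:
            previous, current = 0, 0  # idle for more than a full window
        # Fraction of the current fixed window that has already elapsed.
        elapsed = (now % self.window_seconds) / self.window_seconds
        # The previous window counts only for the part still overlapping.
        estimated = previous * (1.0 - elapsed) + current
        if estimated >= self.limit:
            self.state[client_id] = (window, current, previous)
            return False
        self.state[client_id] = (window, current + 1, previous)
        return True
```

Only three numbers are stored per client, which is why this approach stays light compared with keeping a full request log.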

503 vs 429 Status Code

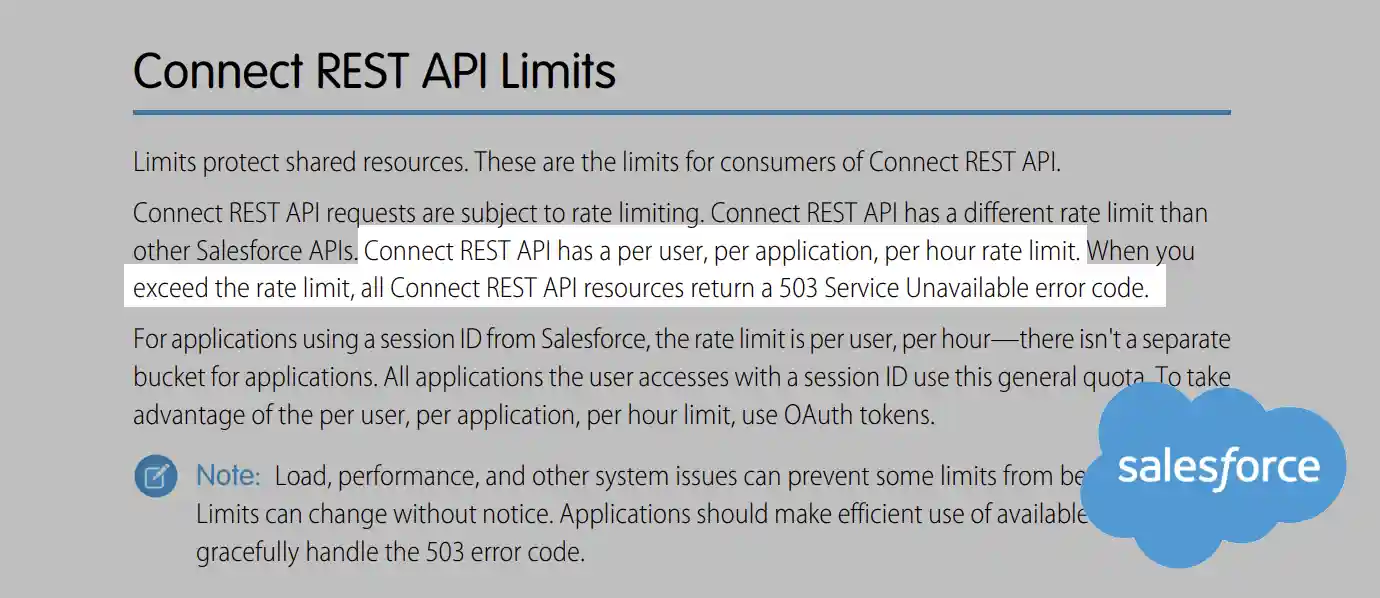

Rate limiting is mostly communicated using HTTP status codes 429 or 503.

Some systems use the 503 Service Unavailable status code for rate limiting instead of 429 Too Many Requests because 429 was only introduced in 2012 with RFC 6585. For example, some AWS services, such as Amazon S3, still respond with 503 instead of 429 when throttling requests.

Going forward, all new APIs should be using 429 Too Many Requests.
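Because both codes are in use, a client may want to treat them the same way. The helper below is a sketch under a few assumptions: the name `retry_delay` is hypothetical, only the delta-seconds form of the `Retry-After` header is handled (the HTTP-date form is ignored), and exponential backoff is used as a fallback.

```python
from typing import Mapping, Optional


def retry_delay(
    status: int,
    headers: Mapping[str, str],
    attempt: int,
    base_seconds: float = 1.0,
    max_seconds: float = 60.0,
) -> Optional[float]:
    """Return seconds to wait before retrying, or None if not throttled."""
    if status not in (429, 503):
        return None
    retry_after = headers.get("Retry-After", "")
    if retry_after.isdigit():
        # The server said how long to wait, so honor it.
        return float(retry_after)
    # No usable hint: back off exponentially (1s, 2s, 4s, ...) up to a cap.
    return min(max_seconds, base_seconds * (2 ** attempt))
```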

Load Shedding and Rate Limiting

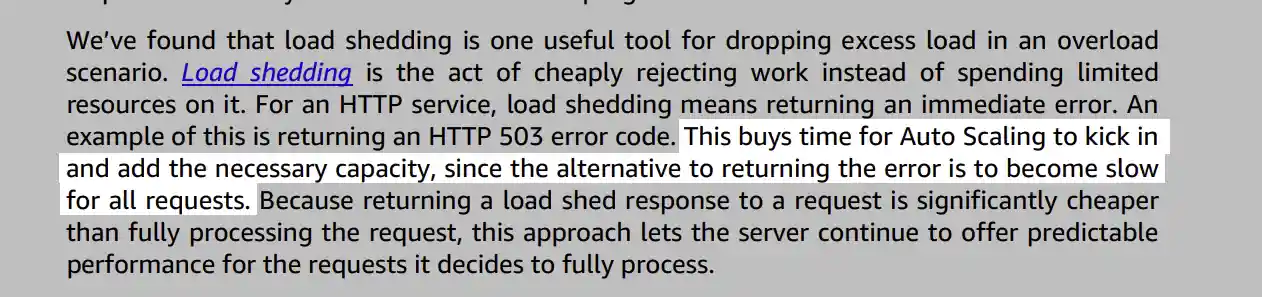

Load shedding is a method used to deal with an increased workload on a system. When the system becomes overwhelmed and can't handle the increased demand, load shedding allows the API to reject calls instead of slowing down and processing them.

Load shedding helps to maintain consistent performance and prevent a ripple effect that could spread to other systems. Load shedding is done by returning an error code, such as an HTTP 429 error, which tells the client that the server is unable to process the request at this time.
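A simple form of load shedding caps the number of requests being processed at once and rejects the rest immediately. The sketch below is illustrative only: `LoadShedder` and `handle` are hypothetical names, and the 429 status follows the example in the text above.

```python
import threading
from typing import Any, Callable, Optional, Tuple


class LoadShedder:
    """Track in-flight requests and refuse new ones above a fixed ceiling."""

    def __init__(self, max_in_flight: int):
        self.max_in_flight = max_in_flight
        self._in_flight = 0
        self._lock = threading.Lock()

    def try_acquire(self) -> bool:
        with self._lock:
            if self._in_flight >= self.max_in_flight:
                return False  # shed this request instead of queueing it
            self._in_flight += 1
            return True

    def release(self) -> None:
        with self._lock:
            self._in_flight = max(0, self._in_flight - 1)


def handle(shedder: LoadShedder, work: Callable[[], Any]) -> Tuple[int, Optional[Any]]:
    """Run `work` if there is capacity, otherwise reject right away."""
    if not shedder.try_acquire():
        return 429, None
    try:
        return 200, work()
    finally:
        shedder.release()
```

Unlike the per-client limits discussed earlier, this check looks at the overall load on the server, so it can reject requests even from clients that are within their own rate limit.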

Amazon uses rate limiting for AWS products to achieve load shedding.

Josip Miskovic is a software developer at Americaneagle.com. Josip has 10+ years of experience in developing web applications, mobile apps, and games.
